Artificial Intelligence in customer care

This blog post describes how Expertflow views artificial intelligence (AI) in customer care, and which role Expertflow products play in that context today or are planned to play in the future.

The primary use of Artificial Intelligence in customer care is to

- recognize what is being said or written

- predict what the next appropriate action should be, and either automatically respond to the client or assist the human contact center agent

The first point is covered by solutions such as Optical Character Recognition (OCR), Automatic Speech Recognition (ASR) and Natural Language Processing (NLP), the latter by dialog engines that work with either human-scripted dialogues or machine-learned dialogues.

Expertflow believes that AI algorithms that provide such functionalities are rapidly evolving and are replaceable.

The common requirement for any AI engine, and the actual treasure, is tagged data for a particular customer context, which is very labor-intensive to produce. Tagged company-owned data in the form of tagged speech (transcribed text), tagged messages (intents and entities), and tagged dialog sequences is the treasure that Expertflow helps you uncover. We are the mining company of AI: we don’t provide data, we don’t process data, but we give you access to it.

AI use cases

AI technologies can be used in several customer care situations. The main ones are described here.

- Natural Language Understanding (NLU) to extract intents and entities from written text (chat, email, documents) or speech

- Dialogue management determines the next appropriate action or response. Dialogue management maintains a conversation state and context. It can also capture slots to be filled that are required to fulfill a certain action. Dialogue management can also interact with a CRM to extract more information about a customer or his past interactions, and use this information to drive the conversation.

- Speech technologies, such as ASR to recognize a speaker’s utterances, or voice biometrics to verify that a voice matches a speaker’s registered voice fingerprint. These can also be used to:

- trigger an automated screen pop-up on the customer advisor’s tablet or call center agent’s desktop, bringing up the right information without any human intervention, based on speech alone

- indicate to a call center agent in real time the probability that the speaker is who he claims to be

- Image and Optical Character Recognition – Extract text and image content from printed documents

- Data Analytics – Recognize patterns in customer behavior, generate customer profiles and clusters
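The slot-filling behavior described for dialogue management above can be sketched in a few lines. This is a minimal illustration, not how any particular dialog engine works; the intent name `change_address`, the slot names, and the action naming scheme are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical slot definitions: intent and slot names are illustrative only.
REQUIRED_SLOTS = {"change_address": ["street", "city", "postal_code"]}


@dataclass
class DialogueState:
    """Conversation state kept between turns."""
    intent: str
    slots: dict = field(default_factory=dict)

    def update(self, entities: dict) -> None:
        # Keep only entities that correspond to a slot required by this intent.
        for name, value in entities.items():
            if name in REQUIRED_SLOTS.get(self.intent, []):
                self.slots[name] = value

    def missing_slots(self) -> list:
        return [s for s in REQUIRED_SLOTS.get(self.intent, []) if s not in self.slots]


def next_action(state: DialogueState) -> str:
    """Ask for the first missing slot, or run the action once all slots are filled."""
    missing = state.missing_slots()
    if missing:
        return f"ask_{missing[0]}"
    return f"execute_{state.intent}"
```

In a real deployment, the entities passed to `update` would come from the NLU layer, and some slots could be pre-filled from the CRM instead of being asked for.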

Alternatives to AI

The above-mentioned goals can also be achieved without neural networks, typically with grammar-based or scripted approaches. Example technologies are Hidden Markov Models, lemmatizers, TF-IDF and bag-of-words. For the conversation part, this corresponds to scripted engines (such as an IVR script). They don’t require large amounts of data and bring immediate results, but they can’t learn from real ongoing conversations, so the success rate eventually plateaus.
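To make the scripted alternative concrete, here is a minimal sketch of intent matching with a bag-of-words and hand-written keyword lists. The intents and keywords are hypothetical; the point is only that such an engine works immediately without training data, and that it only improves when someone edits the lists.

```python
import re
from collections import Counter

# Hypothetical hand-written keyword lists per intent (the "script").
INTENT_KEYWORDS = {
    "change_address": ["change", "address", "move", "moved"],
    "check_balance": ["balance", "account", "much"],
}


def bag_of_words(text: str) -> Counter:
    """Lower-case the text and count word occurrences."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def match_intent(text: str):
    """Return the intent whose keywords overlap most with the text, or None."""
    words = bag_of_words(text)
    scores = {
        intent: sum(words[k] for k in keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None
```

A message that uses none of the listed keywords returns `None`, which is the case that a learning engine or a human agent would need to cover.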

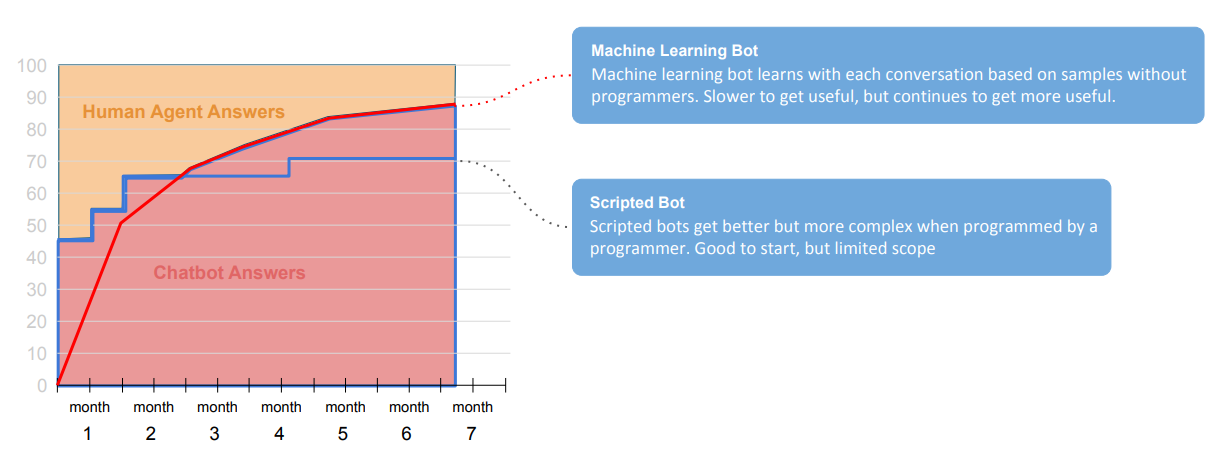

The illustrative diagram shows the typical success rate of chatbot dialog engines using either an AI or an alternative approach: one using a purely scripted approach (blue), one using a pure machine-learning approach (red). The scripted chatbot will immediately achieve positive results but will struggle to learn in the long run. Machine-learning-based bots will have disappointing initial results but then continue to learn over time with more samples. By combining both approaches (yellow), we achieve both immediate results and long-term learning.

The diagram illustrates that initially, 50% of all chat conversations will require an escalation to a human agent (green). This percentage will then decrease over time, as the chatbot’s answer capabilities (yellow) increase.

A promising approach is to combine at least three elements, all working in parallel:

- a scripted/grammar-based engine for immediate results

- a machine-learning engine for ongoing learning

- a human agent to capture any situation that is not recognized by the algorithms

Expertflow CIM normalizes inputs and outputs of AI and other signal extraction engines and blends these with human agents.
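The three-element combination can be sketched as a small router. This is an assumption-laden illustration and not the CIM implementation: the `Engine` signature, the confidence threshold of 0.7, and the stub engines are all hypothetical.

```python
from typing import Callable, Optional, Tuple

# An engine maps a message to (reply, confidence), or None when it has no answer.
Engine = Callable[[str], Optional[Tuple[str, float]]]


def route(message: str, scripted: Engine, learned: Engine,
          threshold: float = 0.7) -> Tuple[str, str]:
    """Return (source, reply); hand over to a human if no engine is confident enough."""
    candidates = []
    for source, engine in (("scripted", scripted), ("ml", learned)):
        result = engine(message)
        if result is not None:
            reply, confidence = result
            candidates.append((confidence, source, reply))
    if candidates:
        confidence, source, reply = max(candidates, key=lambda c: c[0])
        if confidence >= threshold:
            return source, reply
    return "human", "escalate_to_agent"


# Stub engines for illustration only.
def scripted_engine(message: str):
    return ("We are open 9 to 5.", 1.0) if "hours" in message else None


def ml_engine(message: str):
    return ("Is this about billing?", 0.4)
```

Both engines are consulted for every message, and the human agent is the fallback whenever neither is confident. The escalated conversations are exactly the ones worth tagging as new training samples.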

Algorithms are replaceable, data is not

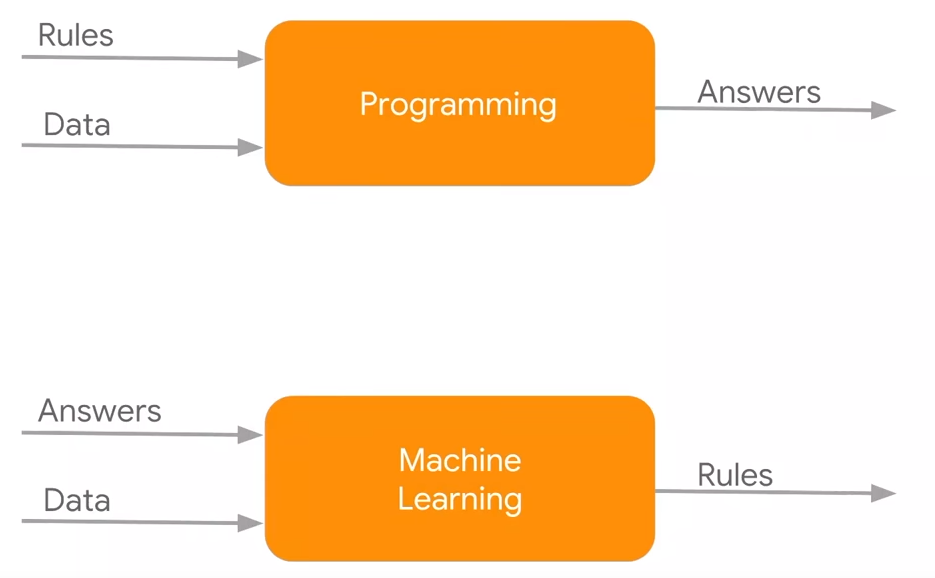

AI systems take labeled data vectors as input and create a model that allows labeling/classification of future input. Input can, for example, be speech or text utterances, and labels could be recognized entities or intents. Whereas traditional programming applies known rules to data to get answers, AI takes data and answers (= labels for the data) to find a model (= rules).

Diagram (Google I/O ‘18)
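The contrast between applying rules and learning rules can be illustrated with a toy example. The scenario (deciding from a waiting time whether a chat gets escalated) and all numbers are made up for illustration; a real model would learn far richer rules than a single threshold.

```python
def rule_based(wait_seconds: float, threshold: float = 120.0) -> bool:
    """Traditional programming: a known rule applied to data gives the answer."""
    return wait_seconds > threshold


def learn_threshold(samples: list, labels: list) -> float:
    """Learning: given data and answers, search for the rule (here a single threshold)."""
    best_threshold, best_errors = None, len(samples) + 1
    for candidate in sorted(samples):
        # Count how many labeled samples the rule "x > candidate" gets wrong.
        errors = sum((x > candidate) != y for x, y in zip(samples, labels))
        if errors < best_errors:
            best_threshold, best_errors = candidate, errors
    return best_threshold
```

With more labeled samples, `learn_threshold` can refine its rule without anyone rewriting code, which is the property the rest of this post relies on.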

Most of the effort in AI work goes into data collection and labeling. Building a neural network (NN) model takes a few lines of code. Training the NN, evaluating it and making predictions take one line of code each:

One-line code sample for training a NN in Keras:

model.fit(data, labels, epochs=10, batch_size=32)

One-line code sample for predicting with a NN in Keras:

prediction = model.predict(x, batch_size=32)

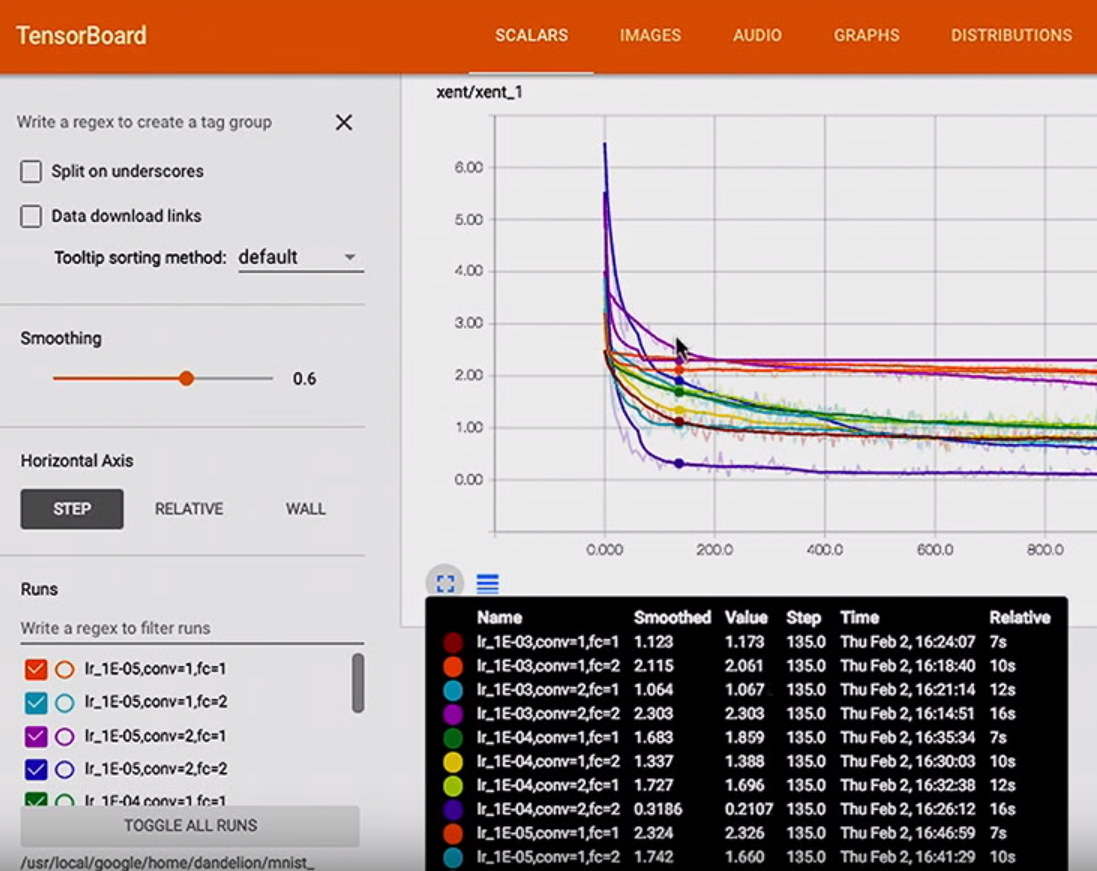

Tools such as TensorBoard allow you to compare multiple types of NNs and models on your data with varying hyperparameters (number of layers, learning rates, convolutions, …), in order to empirically find the best NN for your data.

For most use cases that are interesting for contact centers (speech recognition, phoneme recognition, voice biometrics, NLU, customer profiling, …), there are good GitHub repositories with Jupyter/Python notebooks of models that are appropriate for a given problem, using open-source NN libraries such as Keras or TensorFlow. The best models are those built with your own data, and new scientific publications appear very frequently. One can therefore expect the best results not from static commercial algorithms and software, but from being ready to continuously improve the model, and from investing effort in capturing and labeling data and in working out how to deploy the resulting models into a production environment.

So the right question is not “what is the best algorithm?” (as this will continuously change), but “how do I extract my data, and what do I do with predictions?”.

Training data with sensitive data

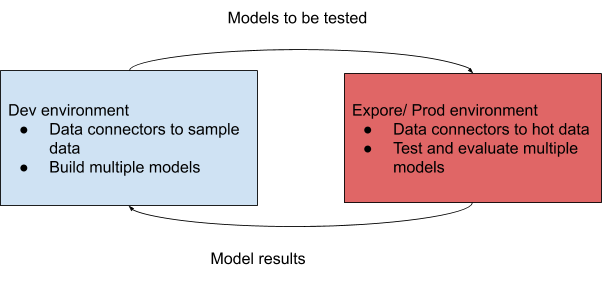

Customer data (on the right in the diagram) is sensitive, and developers (or Expertflow) should not have any access to it. Developers can build data connectors for data conversion and build promising models in a dev environment, using a small set of test data. These connectors and models can then be exported to a production environment, where multiple models can be tested and evaluated. The results are then returned to the dev environment.
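One way to picture this separation is an evaluation function that runs inside the production environment and returns only aggregate scores, never the samples themselves. This is a sketch under that assumption; the function name and the model interface (a callable from text to label) are hypothetical.

```python
def evaluate_in_production(models: dict, prod_samples: list) -> dict:
    """Run inside the production environment; return only aggregate accuracy per model."""
    if not prod_samples:
        raise ValueError("no production samples to evaluate on")
    report = {}
    for name, predict in models.items():
        # Compare each prediction with the label; the text itself never leaves this function.
        correct = sum(predict(text) == label for text, label in prod_samples)
        report[name] = round(correct / len(prod_samples), 3)
    return report
```

Only the returned dictionary of model names and scores would travel back to the dev environment, where developers use it to pick the next models to try.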

In some situations, debugging requires inspecting production data, especially if results on prod and dev data differ widely. One approach to this might be to use data sterilization as mentioned later.
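As a rough illustration of what sterilizing data before inspection could look like, the sketch below masks e-mail addresses and long digit runs. This is only one possible reading of the term; the patterns and placeholder tokens are assumptions, and real data would need patterns reviewed against its own identifiers.

```python
import re

# Illustrative patterns only; they are assumptions, not a complete list of identifiers.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
DIGITS = re.compile(r"\d{4,}")


def mask(text: str) -> str:
    """Replace e-mail addresses and long digit runs with placeholder tokens."""
    text = EMAIL.sub("<EMAIL>", text)
    return DIGITS.sub("<NUMBER>", text)
```

The masked text keeps its sentence structure, so a developer can still see why a model misbehaves without seeing who the customer is.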

Expertflow extracts data and embeds your models in a contact center

“Production environment” in our context means a contact center with human agents, routing, CRM, …

This is where Expertflow provides expertise, as the ways and formats in which data is stored are very similar among our clients. We help companies extract data (speech and screen recordings, chat history/transcripts, email history, document storage, CRM information, call center data, …). We enrich the data, for example with call tags and customer data. We then convert the data (either in a static format or as a stream) into an NN-optimized format (for example TensorFlow’s TFRecord), which allows you to easily test and build your own models.

We then use your best model (which can evolve over time) to assist humans (= contact center agents) in the contact center or to fully automate certain services (self-service/ web-service). So our approach is that your predictive models will gradually learn and improve over time.

Company-specific data sets increase performance

Companies usually have their own slang, vocabulary, use case and dialogues. Models that are trained with actual real samples from that company will always outperform a general-purpose system.

This also means that the best and cheapest way to increase the performance of a NN is to feed it more data specific to the actual use case. Not making use of this treasure of data is a waste.

Expertflow believes that a key to a long-term successful AI strategy is to store and curate (tag) your own data and models, whatever tools are used. Data should be available and exportable in a form that can be shared with any AI solution.

Extract training data with agent tags

What the human contact center agent does can be a tag for what the customer said or wrote. These can be the following:

- An answer written or selected by the agent in a chat

- call tags to classify an entire call

- Capturing actions that agents take in the CRM or agent guidance/ forms

- Capturing the agent’s browser actions via DOM events

This tagging information can be used to tag

- a specific chat message

- speech

At the very least, these initial tags can be used to group similar events, which will then allow for more focused manual supervised training in a second step, rather than having to go through endless data.

This approach can accelerate the generation of training data for neural networks.
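A minimal sketch of this idea: agent tags become labels for the customer text, and the labeled texts are grouped so that a reviewer can check similar events together. The record layout and field names are hypothetical and do not represent a CIM schema.

```python
from collections import defaultdict

# Hypothetical chat log records: the field names are illustrative only.
chat_log = [
    {"customer": "I moved, please update my address", "agent_tag": "change_address"},
    {"customer": "What is my balance?", "agent_tag": "check_balance"},
    {"customer": "New address is 5 Main St", "agent_tag": "change_address"},
]


def to_training_pairs(log):
    """Turn each record into a (text, label) pair, using the agent's tag as the label."""
    return [(rec["customer"], rec["agent_tag"]) for rec in log if rec.get("agent_tag")]


def group_by_tag(pairs):
    """Group texts by label so that a reviewer can check similar events together."""
    groups = defaultdict(list)
    for text, label in pairs:
        groups[label].append(text)
    return dict(groups)
```

Reviewing one group at a time is much faster than reviewing a raw chronological log, which is where the acceleration comes from.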

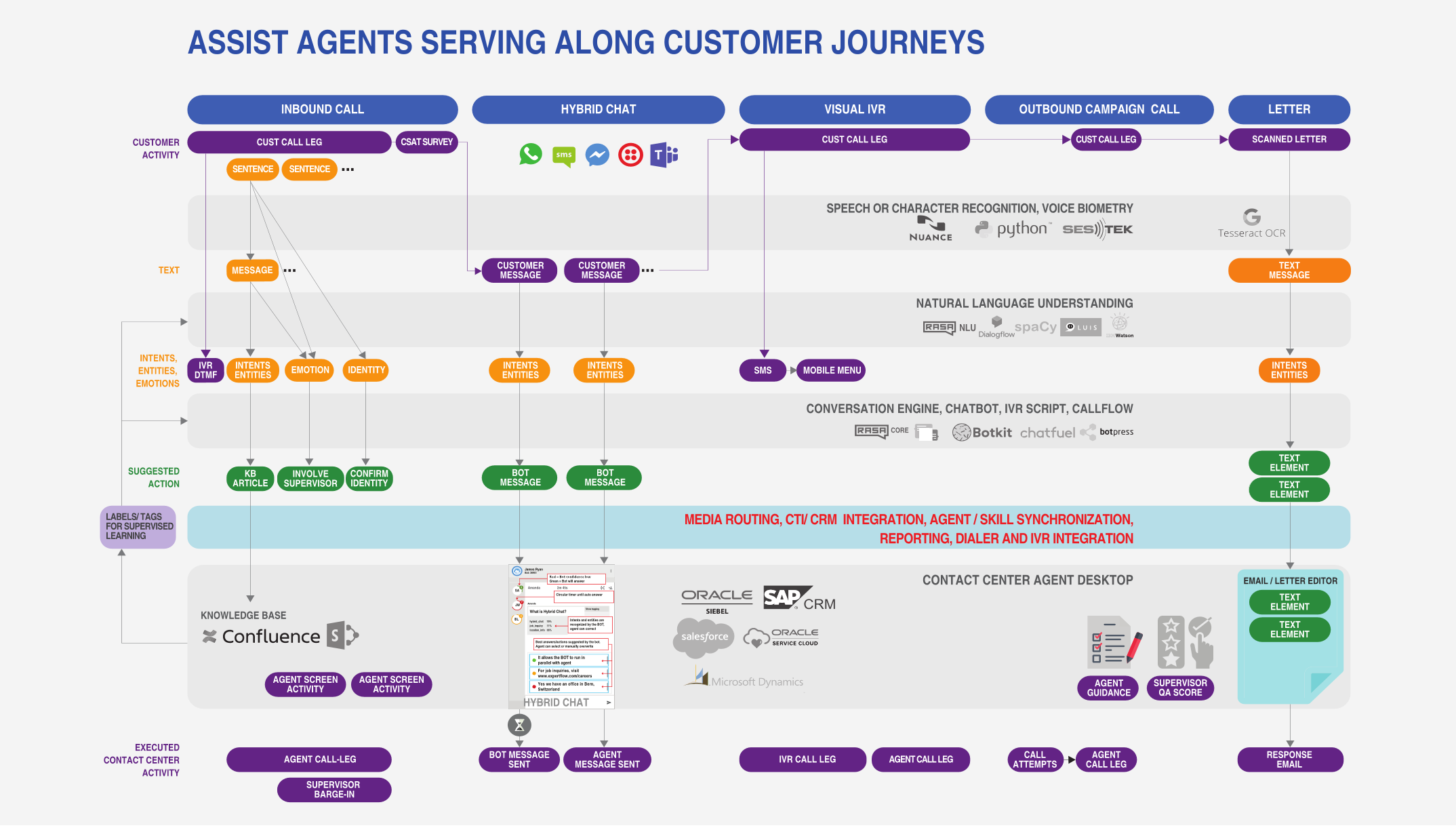

This feedback loop/tagging is illustrated on the left of the CIM diagram.

Predicting next actions based on customer journeys

Expertflow CIM groups customer activities into interactions.

A sequence of activities is a predictor of the next appropriate chatbot or agent action.

By feeding a sequence of actions to a Recurrent Neural Network, the next appropriate action can be determined. An example of such an approach in the Chatbot space is Rasa Core, which is trained with “sample stories” (= customer journeys) consisting of “messages” (= activities). This can be generalized to include activities across any media (voice, website navigation history, call tags, forms, chats,…) as a message.

Expertflow’s goal is to capture any activity occurring in the contact center so that companies can build their own dialog model across any channel.
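To show the shape of the problem without a neural network, the sketch below swaps the recurrent network for a much simpler stand-in: counting which activity most often follows another. It is not how Rasa Core works, and the journeys and activity names are invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical customer journeys; each is an ordered list of activity names.
journeys = [
    ["login", "view_invoice", "chat_billing_question", "agent_explains_invoice"],
    ["login", "view_invoice", "chat_billing_question", "agent_explains_invoice"],
    ["login", "view_invoice", "call_support", "agent_opens_ticket"],
]


def train(journeys):
    """Count which activity follows each activity across all journeys."""
    transitions = defaultdict(Counter)
    for journey in journeys:
        for current, following in zip(journey, journey[1:]):
            transitions[current][following] += 1
    return transitions


def predict_next(transitions, activity):
    """Return the most frequent follower of an activity, or None if it was never seen."""
    followers = transitions.get(activity)
    if not followers:
        return None
    return followers.most_common(1)[0][0]
```

This stand-in only looks at the last activity. A recurrent network would condition on the whole sequence, but it would consume the same kind of input: ordered activities across any channel.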

Use AI engines across all channels

It makes sense to build AI models once, and leverage them across all channels. For example, whether a customer says or chats “I want to change my address”, the resulting actions should be the same, and therefore the dialog/ conversation engine should be the same.

Similarly, the meaning of “I want to change my address” is the same whether it is spoken, written as a chat message, or sent in a letter, so the NLU layer that extracts intents and entities can be the same. It therefore makes sense to use the same NLU engine across document management, internal search engines, email analysis, document analysis and chat message analysis. The wider the use case, the more data will be available, and the better and faster a good model can be trained.
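A sketch of what a shared NLU layer could look like: each channel is first reduced to plain text, and one intent extractor then serves all of them. The channel names, the e-mail cleanup rule, and the stand-in intent rule are hypothetical; for voice, the input is assumed to be a transcript already produced by an ASR engine.

```python
import re


def normalize(channel: str, payload: str) -> str:
    """Reduce channel-specific input to plain lower-case text."""
    text = payload
    if channel == "email":
        # Drop quoted reply lines that start with ">".
        text = "\n".join(line for line in text.splitlines() if not line.startswith(">"))
    return re.sub(r"\s+", " ", text).strip().lower()


def extract_intent(text: str):
    """Stand-in NLU: a single hypothetical rule, shared by every channel."""
    if "change" in text and "address" in text:
        return "change_address"
    return None


def handle(channel: str, payload: str):
    """Channel-specific cleanup first, then the same NLU for everything."""
    return extract_intent(normalize(channel, payload))
```

Because only `normalize` knows about channels, every improvement to the intent extractor benefits chat, voice and e-mail at the same time.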

Vertical AI using large input vectors

It is likely that at some point, AI models will no longer be limited to one use case or one medium. It might be possible to have a neural network whose single input vector includes speech, text and images. The input layers would be different for each medium, but the subsequent layers might be shared.

Already today, speech recognition performs better when combined with an NLU (which tries to make sense of what was said from the text). One can expect that combining this with a conversation engine (which tries to put everything in context based on what was previously said) will further increase recognition rates.

Vendor independence

Vendors that are active in the areas of CRM, bots, contact centers (CC) and chat solutions often offer their own proprietary cloud-based chat or bot solution that can only be used with that particular platform.

Expertflow believes that an ideal solution provides open interfaces to any CRM, Bot, CC or chat solution.

Introduce AI gradually

Our approach is as follows:

- Initially, all conversations happen between humans only. One might, however, choose to position any chat towards a client as a “bot”, even if humans are responding in a Wizard-of-Oz fashion. This avoids wrong expectations and disappointment once a bot does indeed respond.

- Humans will always, and at all stages, act as a safety net when AI fails in a conversation

- AI supports all human interactions by suggesting and facilitating the best actions. This can start with certain channels only (chat, email), but the ultimate goal should be to cover all interactions (voice, video, retail branch, …)

- AI should monitor and learn from each human interaction (text and voice), and continuously build a stock of valid conversations, interactions and actions. This learning is initially entirely supervised with manual tagging, but can gradually move to semi-supervised or unsupervised tagging. This learning is a continuous, perpetual process, as all companies will evolve in their products and services. This continued learning is the reason why an AI/data-driven approach is appropriate: it can learn implicitly from valid interactions without requiring explicitly (human-)programmed processes.

- If and where AI is sufficiently confident that it has recognized context, intent, and entities from a customer utterance/ email/ letter, AI can be enabled

- to respond autonomously without human interaction, or

- to respond after a certain delay if a human agent hasn’t responded.

This means that AI is introduced gradually and continuously in support of human interactions. AI first has to prove its worth before being allowed to interact autonomously. There is no “now we do AI” big-bang project as some examples have shown.
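The gradual hand-over can be expressed as a small decision function. The thresholds, the 30-second delay, and the returned action names are hypothetical values chosen for illustration; in practice they would be tuned per channel and use case.

```python
def decide(confidence: float, seconds_waiting: float,
           auto_threshold: float = 0.9, suggest_threshold: float = 0.6,
           max_wait: float = 30.0) -> str:
    """Choose how the AI's proposed reply is used for one customer message."""
    if confidence >= auto_threshold:
        return "bot_replies"  # respond autonomously
    if confidence >= suggest_threshold:
        if seconds_waiting >= max_wait:
            return "bot_replies_after_delay"  # no human answered in time
        return "suggest_to_agent"  # the human stays in control
    return "human_only"  # AI is not confident enough to help
```

Starting with a very high `auto_threshold` keeps almost everything with human agents. Lowering it step by step, as the model proves itself, is one way to introduce AI without a big-bang switch.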

What Expertflow provides

CIM is a framework that tracks activities from multiple sources and allows you to embed any AI engine for a given purpose. You own the data, and you can change the AI algorithms at any point in time.

An example use case is Hybrid Chat, and we plan to provide similar vendor-agnostic integrations for voice, email and written communication.

Blending media

What you can already do with CIM is to blend multiple media, for example:

- Run a voice and chat (SMS, FB Messenger, Whatsapp,…) campaign, triggered and controlled via an API from your Marketing or CRM tool

- Start a chat or chatbot from a voice call

- Trigger a voice call from a chat

- Run surveys after calls via SMS or voice, controlled by or handed off to a chatbot dialogue

- Initiate automated callbacks based on certain events